Minimal curling for HTTP headers

This is a short tutorial on a few more of curl's options, as well as an introduction to some of the metadata of Web interactions: HTTP headers.

The technical takeaway

Sometimes we don't want to fetch a full webpage; we just want its headers, i.e. the metadata that the server sends back about the requested page:

curl -I http://t.co/d

Output:

HTTP/1.1 301 Moved Permanently
cache-control: private,max-age=300
content-length: 0
date: Fri, 16 Jan 2015 01:33:21 UTC
expires: Fri, 16 Jan 2015 01:38:21 GMT
location: https://twitter.com/dhelder
server: tsa_a
set-cookie: muc=4acf9263-ddba-45f7-a262-3982bf22e1a2; Expires=Wed, 28 Dec 2016 01:33:21 GMT; Domain=t.co
x-connection-hash: 44d8010b2aae46c00574add8b43e4c0d
x-response-time: 5

To have curl automatically follow all redirects to the final destination:

curl -L http://t.co/d

Combining -L and -I fetches the headers for every intermediate URL as well as the final one:

curl -IL http://t.co/d

Use the --write-out option (-w for short) to print customized information about the request. The full syntax is hard to memorize, so consult the curl docs for --write-out:

user@host:~$ curl http://t.co/d \
> -w "Status code: %{http_code}; Redirects to %{redirect_url}\n"
Status code: 301; Redirects to https://twitter.com/dhelder

Thinking about redirects

Since Twitter only allows 140 characters per message, pasting in a full URL left less room for the message itself. So URL shorteners, such as bit.ly, offered a service in which a short URL like this one:

bit.ly/cow (10 characters)

would redirect to a full URL:

http://www.amazon.com/Purple-Cow-Transform-Business-Remarkable/dp/159184021X/ref=sr_1_1?ie=UTF8&s=books&qid=1224641119&sr=8-1 (125 characters)
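If you want to check character counts like these yourself, the shell's `${#var}` expansion gives the length of a string. A quick sketch; the variable names `short_url` and `long_url` are just illustrative:

```shell
# Measure both URLs with the POSIX ${#var} length expansion.
# Single quotes keep the shell from interpreting the ? and & characters.
short_url='bit.ly/cow'
long_url='http://www.amazon.com/Purple-Cow-Transform-Business-Remarkable/dp/159184021X/ref=sr_1_1?ie=UTF8&s=books&qid=1224641119&sr=8-1'

echo "short: ${#short_url} characters"   # prints "short: 10 characters"
echo "long:  ${#long_url} characters"    # prints "long:  125 characters"
```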

This (usually) happens so fast that we don't even perceive that it's happening. Is there actually a "webpage" at bit.ly/cow? Or is it just some kind of mirage? And if it were just a mirage, does that mean nothing bad happens if bit.ly goes down?

Twitter has its own URL shortener service – t.co – which automatically wraps any URL that you tweet, even if it's already shortened. Among other conveniences, this allows Twitter to track the clickthroughs of those URLs.

Try visiting http://t.co/d in your browser. If your Internet connection is working properly, it might seem that http://t.co/d doesn't really exist: your browser immediately shows the target of that short URL, https://twitter.com/dhelder

So does the t.co domain exist? One easy way to find out is to use the whois program to check whether the domain is registered:

whois t.co

# Output:
# Domain Name: T.CO
# Domain ID: D740225-CO
# Sponsoring Registrar: CSC CORPORATE DOMAINS
# Sponsoring Registrar IANA ID: 299
# Registrar URL (registration services): whois.corporatedomains.com
# Domain Status: clientTransferProhibited
# ...
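To make the same check in a script, one rough approach is to look for a `Domain Name:` line in the whois output. This is a heuristic sketch, assuming the registry reports registered domains that way; registries word their "not found" replies differently, so treat it as a quick test rather than a guarantee. The function name `is_registered` is hypothetical:

```shell
# is_registered: read whois output on stdin and succeed (exit status 0)
# if it contains a "Domain Name:" record. Heuristic only: whois output
# formats vary from one registry to another.
is_registered() {
  grep -qi '^[[:space:]]*domain name:'
}

# Usage (needs the whois program and network access):
#   if whois t.co | is_registered; then echo "t.co is registered"; fi
```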

So t.co does exist. Let's use curl to see how web requests work at a more granular level.

Basic curl of t.co

Let's run curl against http://t.co/d and see what content lives there:

curl http://t.co/d

You shouldn't see any output, which is also what we'd see if a page existed but happened to be completely empty.

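So let's use curl's --head option (-I for short). It sends an HTTP HEAD request, which asks the server for the response headers only, without the page body.

The documentation's description of the headers themselves comes from the closely related -i (--include) option, which prints the headers together with the body:

(HTTP) Include the HTTP-header in the output. The HTTP-header includes things like server-name, date of the document, HTTP-version and more.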

curl -I http://t.co/d

The output:

HTTP/1.1 301 Moved Permanently
cache-control: private,max-age=300
content-length: 0
date: Fri, 16 Jan 2015 01:19:14 UTC
expires: Fri, 16 Jan 2015 01:24:14 GMT
location: https://twitter.com/dhelder
server: tsa_a
set-cookie: muc=94b7153f-a0df-4948-82e2-a15beeff0244; Expires=Wed, 28 Dec 2016 01:19:14 GMT; Domain=t.co
x-connection-hash: 14937796fe343c1e4cff96a8f2658bbe
x-response-time: 5

Without knowing anything about the underpinnings of the Hypertext Transfer Protocol, a few lines of that output should stick out:

- "HTTP/1.1 301 Moved Permanently" seems to indicate that whatever is at t.co/d has "moved permanently".
- "content-length: 0" makes sense, given that we get nothing when curling for the webpage at t.co/d.
- "location: https://twitter.com/dhelder" seems to be the field that points to where t.co/d sends the browser off to.
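Because each header is a plain `name: value` line, ordinary text tools can pull a single field, such as location, out of that output. A minimal sketch in POSIX shell and awk; `header_value` is a hypothetical helper, not part of curl:

```shell
# header_value NAME: read raw HTTP headers on stdin (e.g. the output of
# `curl -sI URL`) and print the value of the first header called NAME.
# Header names are case-insensitive, so "Location" matches "location".
header_value() {
  awk -v name="$1" '
    {
      line = $0
      sub(/\r$/, "", line)             # header lines end in CRLF; drop the CR
    }
    index(tolower(line), tolower(name) ":") == 1 {
      sub(/^[^:]*:[ \t]*/, "", line)   # strip "name:" and the spaces after it
      print line
      exit
    }'
}

# Usage (needs network access):
#   curl -sI http://t.co/d | header_value location
```

Only the text before the first colon is treated as the name, so values that contain colons themselves, like the https:// URL in location, come through intact.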

So let's try curling the headers for https://twitter.com/dhelder:

curl -I https://twitter.com/dhelder

You'll see a much fuller set of headers, starting with:

HTTP/1.1 200 OK
cache-control: no-cache, no-store, must-revalidate, pre-check=0, post-check=0
content-length: 254699
...
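The most important difference between the two responses is the code on the first line: 301 for the short URL, 200 for the profile page. HTTP groups status codes by their first digit, so a script can tell a redirect from a success without memorizing every code. A small sketch; `status_class` is a hypothetical helper name:

```shell
# status_class CODE: name the class of an HTTP status code, based on its
# first digit (1xx informational, 2xx success, 3xx redirection,
# 4xx client error, 5xx server error).
status_class() {
  case "$1" in
    1[0-9][0-9]) echo informational ;;
    2[0-9][0-9]) echo success ;;
    3[0-9][0-9]) echo redirect ;;
    4[0-9][0-9]) echo "client error" ;;
    5[0-9][0-9]) echo "server error" ;;
    *)           echo unknown ;;
  esac
}

# Usage (needs network access): the code is the second field of the
# status line, i.e. the first line of the headers.
#   code=$(curl -sI https://twitter.com/dhelder | awk 'NR == 1 { print $2 }')
#   status_class "$code"
```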

Auto-following redirects

As we saw above, by default, curl doesn't automatically follow redirects. So if you target a URL that, unbeknownst to you, has "moved permanently", your curl command will not fetch what you expected. For most automated web-scraping scenarios, we want curl to keep going until we reach the "final" destination of a redirected URL. There's an option for that: -L

curl -L http://t.co/d
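To see roughly what -L is doing for us, here is a sketch that follows redirects by hand: fetch the headers with -I, and while the status code is a 3xx, jump to the URL in the location header. It's a simplified illustration, not how curl implements -L: it assumes each location header holds an absolute URL (curl also resolves relative ones), and it uses HEAD requests, which some servers answer differently from GET. The function name `follow_redirects` and its hop limit are hypothetical:

```shell
# follow_redirects URL [MAX]: print "<status> <url>" for each hop until a
# non-3xx response, following at most MAX redirects (default 10).
# Uses plain (global) shell variables to stay POSIX-compatible.
follow_redirects() {
  url=$1
  max=${2:-10}
  hops=0
  while [ "$hops" -le "$max" ]; do
    # -s: no progress meter; -I: headers only (HEAD request)
    headers=$(curl -sI "$url" | tr -d '\r')
    code=$(printf '%s\n' "$headers" | awk 'NR == 1 { print $2 }')
    [ -n "$code" ] || return 1          # no response at all
    echo "$code $url"
    case "$code" in
      3[0-9][0-9]) ;;                   # redirect: keep going
      *) return 0 ;;                    # anything else is the final stop
    esac
    # Assumes an absolute URL in the location header.
    url=$(printf '%s\n' "$headers" |
      awk 'tolower($1) == "location:" { print $2; exit }')
    [ -n "$url" ] || return 1           # 3xx without a location header
    hops=$((hops + 1))
  done
  return 1                              # gave up: too many redirects
}

# Usage (needs network access):
#   follow_redirects http://t.co/d
```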

Auto-following the headers

If you want to follow the redirects but just want the headers, then you can combine -I and -L:

user@host:~$ curl -I -L http://t.co/d
HTTP/1.1 301 Moved Permanently
cache-control: private,max-age=300
content-length: 0
...

HTTP/1.1 200 OK
cache-control: no-cache, no-store, must-revalidate, pre-check=0, post-check=0
content-length: 254651
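Each response in that combined output starts with its own status line, so listing just those lines gives a compact view of the redirect chain. A minimal sketch; `status_codes` is a hypothetical helper name:

```shell
# status_codes: read the combined header dump from `curl -sIL URL` on
# stdin and print the status code of each response, one per line.
# Every response begins with a line like "HTTP/1.1 301 Moved Permanently".
status_codes() {
  tr -d '\r' | awk '/^HTTP\// { print $2 }'
}

# Usage (needs network access):
#   curl -sIL http://t.co/d | status_codes
```

Stripping the carriage returns first matters for status lines like "HTTP/2 200", where the code is the last field and would otherwise carry a trailing CR.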

Formatting the header information

Check out the curl manpage entry for this option:

-w, --write-out <format>

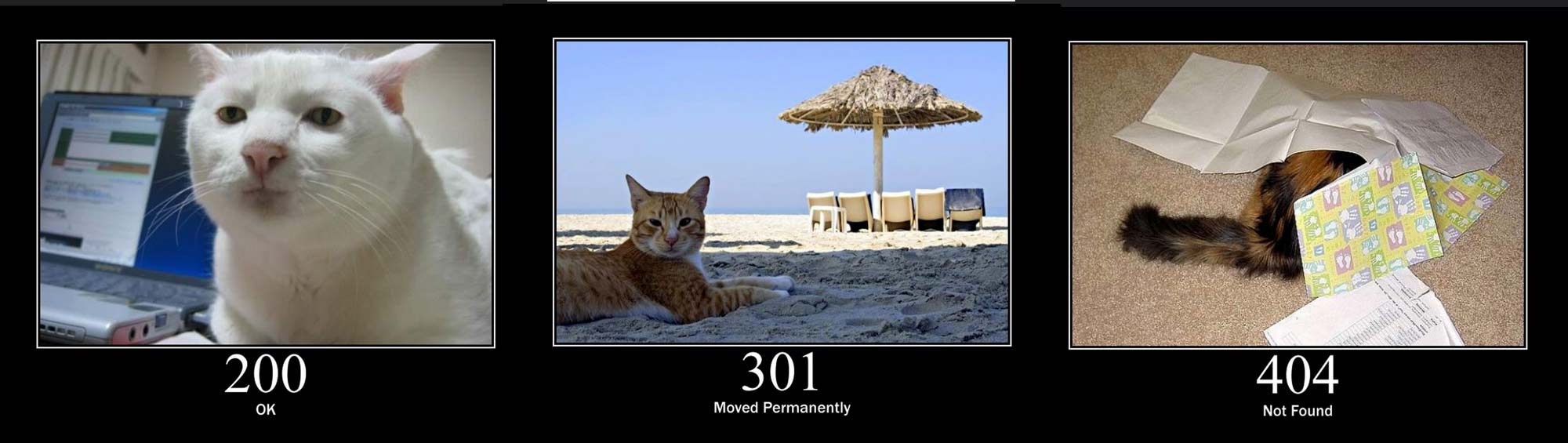

You'll see a variety of variables for customizing what curl prints about a request. For example, if we just want to see the HTTP status code (e.g. 200 for an OK page, 404 for a missing one):

user@host:~$ curl http://t.co/d -w '%{http_code}\n'
301

user@host:~$ curl http://t.co/d -w '%{redirect_url}\n'
https://twitter.com/dhelder

user@host:~$ curl -s https://twitter.com/dhelder \
> -w '%{http_code}\n' -o /dev/null

Note: -o /dev/null tells curl to write the page it downloads to /dev/null, which is effectively the trash chute of Unix. Any time you want a program's output neither displayed nor saved to a file, send it to /dev/null. The -s (--silent) option suppresses curl's progress meter and error messages, so all that's left is the -w output.
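Because -s, -o /dev/null and -w together reduce each request to a single line of output, checking several URLs at once is a short loop. A minimal sketch; `check_status` is a hypothetical function name. curl reports 000 for %{http_code} when it received no HTTP response at all (for example, when the host can't be reached):

```shell
# check_status URL...: print "<status code> <url>" for each URL given.
# -s hides progress/errors, -o /dev/null discards the page body,
# and -w '%{http_code}' prints only the response code.
check_status() {
  for url in "$@"; do
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    printf '%s %s\n' "$code" "$url"
  done
}

# Usage (needs network access):
#   check_status http://t.co/d https://twitter.com/dhelder
```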