Pipes and filters

The concept of filters is one of the most powerful programming paradigms we'll use, both in thinking of our functions as consumers and providers of information in the "real" world, and in thinking about how information is processed at the programmatic level.

If you accept the Unix philosophy that programs should be done

Text filters

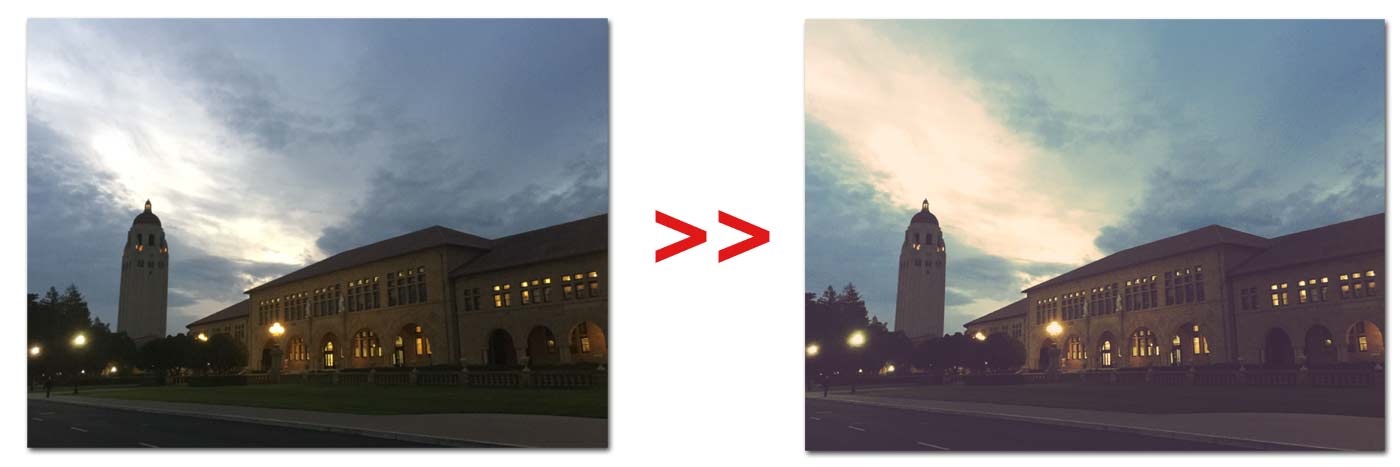

It's not wrong to think of filters in the way that they're used on Instagram or Twitter:

In the example below, the original image is passed through the "Golden" filter to produce a new image:

Similarly, in Unix, many of the programs act as filters on text, taking text input and creating a new version of that text.

Let's take an excerpt from Hamlet and put it into a file named ham.txt:

To be, or not to be: that is the question:
Whether 'tis nobler in the mind to suffer
The slings and arrows of outrageous fortune,
Or to take arms against a sea of troubles,
And by opposing end them? To die: to sleep;
No more; and by a sleep to say we end
The heart-ache and the thousand natural shocks
That flesh is heir to, 'tis a consummation
Devoutly to be wish'd. To die, to sleep;
To sleep: perchance to dream: ay, there's the rub;
For in that sleep of death what dreams may come
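If you'd like to follow along, here's one way to create ham.txt straight from the shell. It's a minimal sketch using a here-document; quoting the 'EOF' delimiter tells the shell to copy the text literally instead of expanding anything inside it.

```shell
# Write the 11-line excerpt to ham.txt (overwrites any existing ham.txt).
# The quoted 'EOF' delimiter makes the shell copy the text verbatim.
cat > ham.txt <<'EOF'
To be, or not to be: that is the question:
Whether 'tis nobler in the mind to suffer
The slings and arrows of outrageous fortune,
Or to take arms against a sea of troubles,
And by opposing end them? To die: to sleep;
No more; and by a sleep to say we end
The heart-ache and the thousand natural shocks
That flesh is heir to, 'tis a consummation
Devoutly to be wish'd. To die, to sleep;
To sleep: perchance to dream: ay, there's the rub;
For in that sleep of death what dreams may come
EOF

# Sanity check: count the lines in the new file (there should be 11)
wc -l ham.txt
```

Each verse line is its own line in the file, with no blank lines in between, which matters for the line counts used by head and tail below.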

Unnecessary usage of cat

By now, you should be familiar with the use of the cat command to print the contents of a file (or several files):

cat ham.txt

For this lesson, I'll be using cat at the beginning of each "pipeline" to emphasize the concept of text being "piped" from one command (starting with cat) to another:

cat ham.txt | head -n 2

cat ham.txt | grep 'the'

However, many of the text-processing programs can open files themselves:

head -n 2 ham.txt

grep 'the' ham.txt

So invoking cat is kind of unnecessary. In fact, there's a geeky, tongue-in-cheek award, the "Useless Use of Cat Award," for calling out such unnecessary invocations. However, for this tutorial, I'll stick with cat-ing files into the other Unix tools so that you can see that each example starts with a stream of text from ham.txt.

head and tail

The head and tail commands will filter a given text stream and return the first or last n lines, respectively.

The first 4 lines of ham.txt:

cat ham.txt | head -n 4

To be, or not to be: that is the question:
Whether 'tis nobler in the mind to suffer
The slings and arrows of outrageous fortune,
Or to take arms against a sea of troubles,

The last 2 lines of ham.txt:

cat ham.txt | tail -n 2

To sleep: perchance to dream: ay, there's the rub;
For in that sleep of death what dreams may come

Combining head and tail

Let's see what happens when we combine head and tail:

cat ham.txt | head -n 4 | tail -n 2

What gets returned? The last 2 lines of the first 4 lines of ham.txt:

The slings and arrows of outrageous fortune,
Or to take arms against a sea of troubles,

Let's go through that transformation step-by-step:

| cat ham.txt | head -n 4 | tail -n 2 |
|---|---|---|
| To be, or not t… | To be, or not t… | |
| Whether 'tis no… | Whether 'tis no… | |
| The slings and … | The slings and … | The slings and … |
| Or to take arms… | Or to take arms… | Or to take arms… |
| And by opposing… | | |
| No more; and by… | | |
| The heart-ache … | | |
| That flesh is h… | | |
| Devoutly to be … | | |
| To sleep: perch… | | |
| For in that sle… | | |
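The head-then-tail trick generalizes: to get lines START through END, take the first END lines, then the last END - START + 1 of those. Here's a minimal sketch as a shell function. The name lines_between is hypothetical, and the function assumes END is no larger than the number of input lines (otherwise tail reaches back past START). seq stands in for ham.txt so you can see which line numbers survive.

```shell
# Hypothetical helper: print lines START..END (inclusive) of standard input.
# Assumes 1 <= START <= END and END <= the number of input lines.
lines_between() {
  start=$1
  end=$2
  # keep the first END lines, then the last (END - START + 1) of those
  head -n "$end" | tail -n "$((end - start + 1))"
}

# Lines 3-4 of an 11-line stream, equivalent to head -n 4 | tail -n 2
seq 1 11 | lines_between 3 4
```

With ham.txt, cat ham.txt | lines_between 3 4 prints the same two lines as the pipeline above.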

Key concepts of filters

Filtering data from one program to another is one paradigm of data programming. Even if you're already a programmer (especially in Java), it may not be obvious what's going on here, particularly that the original source of the data is left unchanged by this pipeline.

Again, let's look at this command:

cat ham.txt | head -n 4 | tail -n 2

- The original data, i.e. ham.txt, does not get changed. By the time text is streaming from cat ham.txt, we're done with that file, for the purposes of this pipeline. To reiterate: neither head nor tail, nor any other filter-like program, changes the contents of ham.txt.

- By the time the text stream reaches the tail command, for all tail knows, the text originated from the previous head command. In fact, by the time head sees the text stream, it doesn't think of it as coming from a file; it thinks of the text as just the output from cat. In other words, neither head nor tail has any "knowledge" of ham.txt. That reinforces the first point: neither head nor tail can affect ham.txt.
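One way to convince yourself of both points is a small experiment like the sketch below. It uses cksum to fingerprint a file before and after running a pipeline on it, and it feeds head the same text from two different sources: a file and printf. The scratch file name demo.txt is hypothetical.

```shell
# Hypothetical scratch file with three lines of text
printf 'one\ntwo\nthree\n' > demo.txt

# Point 1: the pipeline reads demo.txt but never writes to it
before=$(cksum < demo.txt)
cat demo.txt | head -n 2 | tail -n 1
after=$(cksum < demo.txt)
[ "$before" = "$after" ] && echo "demo.txt is unchanged"

# Point 2: head only ever sees a stream of text, so it produces the
# same output whether that text came from a file or from printf
from_file=$(cat demo.txt | head -n 2)
from_printf=$(printf 'one\ntwo\nthree\n' | head -n 2)
[ "$from_file" = "$from_printf" ] && echo "head can't tell the difference"

# Clean up the scratch file
rm demo.txt
```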

The upshot of all this is that cat, head, and tail all can act independently of each other. But they can all "talk" to each other through a common "interface": text.

This is one of the killer features of Unix, and it's how complicated, powerful data tasks can be done with such simple tools: you, the programmer, have the flexibility to specify exactly what you want by combining and arranging these simple tools.

A quick exercise: what do you expect the output will be when switching the order of head and tail from the previous example?

cat ham.txt | tail -n 4 | head -n 2

Think through the answer, then run the command yourself to see what it produces.

A common mistake when starting out with pipelines is to interpret the command as returning the last 4 lines and the first 2 lines of ham.txt.

But as I said: tail and head don't know about ham.txt, nor do they know about what was in ham.txt. The tail command just knows what cat spit at it, and likewise, head just knows what came out of tail.

That's why we end up with just two lines of output:

| cat ham.txt | tail -n 4 | head -n 2 |
|---|---|---|
| To be, or not t… | | |
| Whether 'tis no… | | |
| The slings and … | | |
| Or to take arms… | | |
| And by opposing… | | |
| No more; and by… | | |
| The heart-ache … | | |
| That flesh is h… | That flesh is h… | That flesh is h… |
| Devoutly to be … | Devoutly to be … | Devoutly to be … |
| To sleep: perch… | To sleep: perch… | |
| For in that sle… | For in that sle… | |
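To check the answer without squinting at Shakespeare, you can swap ham.txt for seq, which prints numbered lines. seq 1 11 produces 11 lines, the same count as ham.txt, so the numbers that come out match the rows that survive in the two tables.

```shell
# seq 1 11 prints the numbers 1 through 11, one per line,
# standing in for the 11 lines of ham.txt.

# head first: keep lines 1-4, then the last 2 of those
seq 1 11 | head -n 4 | tail -n 2

# tail first: keep lines 8-11, then the first 2 of those
seq 1 11 | tail -n 4 | head -n 2
```

The first pipeline prints 3 and 4; the second prints 8 and 9, which in ham.txt are the "That flesh is heir to…" and "Devoutly to be wish'd…" lines.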

Replacing characters with tr

Let's try a new filter: tr

The tr tool, short for "translate", can be used to replace one character with another from a given text stream.

To replace all the lowercase t characters in the first three lines of ham.txt with x, we can pipe the output of cat (which simply prints the contents of a file) through head and into tr, like so:

cat ham.txt | head -n 3 | tr 't' 'x'

To be, or nox xo be: xhax is xhe quesxion:
Whexher 'xis nobler in xhe mind xo suffer
The slings and arrows of ouxrageous forxune,
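tr works on individual characters, not words: it pairs the characters in the first set with the characters in the second set, position by position. A quick sketch, using printf instead of a file just to keep the input short:

```shell
# t -> x and b -> y, applied to every matching character
printf 'to be\n' | tr 'tb' 'xy'

# 'the' is NOT treated as a word: t -> d, h -> o, e -> g,
# so every t, h, and e anywhere in the input is changed
printf 'the cat\n' | tr 'the' 'dog'
```

The second command prints "dog cad" rather than "dog cat", a handy reminder that tr is the wrong tool for replacing whole words.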

Changing case

The tr command is limited to changing one character into another. However, it does accept character classes, that is, named types of characters (such as all lowercase letters) to translate.

For example, to change all of the lower-case characters to upper-case:

cat ham.txt | head -n 3 | tr '[:lower:]' '[:upper:]'

TO BE, OR NOT TO BE: THAT IS THE QUESTION:
WHETHER 'TIS NOBLER IN THE MIND TO SUFFER
THE SLINGS AND ARROWS OF OUTRAGEOUS FORTUNE,
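The classes work in either direction. Lowercasing is the direction you'll want later on, when "The" and "the" should be counted as the same word. A minimal sketch:

```shell
# Map every uppercase letter to its lowercase counterpart,
# so three spellings of the same word become identical
printf 'The THE the\n' | tr '[:upper:]' '[:lower:]'
```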

The tr command also has the -d option for deleting a given character or character class.

The following example deletes all punctuation from the first three lines of ham.txt:

cat ham.txt | head -n 3 | tr -d '[:punct:]'

To be or not to be that is the question
Whether tis nobler in the mind to suffer
The slings and arrows of outrageous fortune
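One side effect worth knowing before the next exercise: hyphens and apostrophes count as punctuation, so deleting [:punct:] also fuses hyphenated words and strips the apostrophe from contractions. This sketch runs on two fragments from later in the excerpt, and contrasts the class with an explicit list of characters to delete:

```shell
# The hyphen in heart-ache and the apostrophe in 'tis are both in [:punct:]
printf '%s\n' "The heart-ache, 'tis" | tr -d '[:punct:]'

# Deleting only a specific character leaves the other punctuation alone
printf '%s\n' "The heart-ache, 'tis" | tr -d ','
```

The first command prints "The heartache tis"; the second prints "The heart-ache 'tis".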

An application of filters

Transforming and filtering lines of text is fun, but usually we want to end up with some kind of analysis or action.

Create a list of the 10 most frequent words in ham.txt, with their counts, in descending order of frequency (ignoring capitalization and punctuation), e.g.:

10 dog
8 cat
4 orange
2 apple

- You need a way to cut a list down to 10 items

- You need a way to put each word on a separate line

- You need a way to remove punctuation

- You need a way to change all the words to a common case, so that "The" will be counted with "the"

- You need a way to sort a list

- You need a way to count the number of occurrences of a word

The program will be similar to the toy example in "Chapter 4: Creating Reusable Command-Line Tools" of the class textbook, "Data Science at the Command Line".

Try figuring it out yourself. Then look at the answer below. If it doesn't make sense, try running it step by step.

cat ham.txt \
| tr '[:upper:]' '[:lower:]' \
| tr -d '[:punct:]' \
| tr ' ' '\n' \
| sort \
| uniq -c \
| sort -rn \
| head -n 10
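If the middle of that pipeline is puzzling, the key detail is that uniq only collapses duplicate lines that sit next to each other, which is why sort has to come first. A small sketch on a three-word input:

```shell
# Without sort: the two "the" lines aren't adjacent, so uniq -c
# reports them separately (3 output lines)
printf 'the\nbe\nthe\n' | uniq -c

# With sort: identical words end up adjacent, so uniq -c can
# count them together (2 output lines)
printf 'the\nbe\nthe\n' | sort | uniq -c

# sort -rn then orders those counts numerically, largest first
printf 'the\nbe\nthe\n' | sort | uniq -c | sort -rn
```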

Why pipes?

If you ran each step one at a time, you might have realized that it's possible to save an interim file after each action.

For example:

cat ham.txt | tr '[:upper:]' '[:lower:]' > step1.txt

cat step1.txt | tr -d '[:punct:]' > step2.txt

# ...and so forth

This works just fine, but it's a bit cumbersome, especially the part where you end up with a lot of interim files that need to be deleted afterwards. But the concept is the same: each step creates a new transformation of the input text, regardless of whether you save it to a temporary file or pipe it directly to the next program.
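To see that the two approaches really are equivalent, here's a sketch that runs the word-count steps both ways and compares the results. It uses a hypothetical two-line sample.txt so it runs on its own; swap in ham.txt to try it on the Hamlet excerpt. The step*.txt and *_result.txt file names are also just illustrative.

```shell
# Hypothetical sample input (replace with ham.txt if you have it)
printf 'To be, or not to be:\nthat is the question:\n' > sample.txt

# Step by step, saving an interim file after each action
cat sample.txt | tr '[:upper:]' '[:lower:]' > step1.txt
cat step1.txt | tr -d '[:punct:]' > step2.txt
cat step2.txt | tr ' ' '\n' > step3.txt
cat step3.txt | sort > step4.txt
cat step4.txt | uniq -c > step5.txt
cat step5.txt | sort -rn > step6.txt
cat step6.txt | head -n 10 > interim_result.txt

# The same transformations as a single pipeline, with no interim files
cat sample.txt \
| tr '[:upper:]' '[:lower:]' \
| tr -d '[:punct:]' \
| tr ' ' '\n' \
| sort \
| uniq -c \
| sort -rn \
| head -n 10 > pipeline_result.txt

# cmp -s exits successfully only if the two files are byte-for-byte identical
cmp -s interim_result.txt pipeline_result.txt && echo "identical results"

# The interim files have to be cleaned up by hand
rm step1.txt step2.txt step3.txt step4.txt step5.txt step6.txt
```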

Resources

If you like videos, Software Carpentry has a nice tutorial on pipes and filters:

Check out their written guide.