Death Row rows parsing

This is another exercise in HTML and plaintext (well, and PDF, too) parsing. It will involve compiling death row data from three different state criminal justice institutions:

- Texas Department of Criminal Justice Death Row

- Texas List of All Offenders on Death Row 1923-1973

- Florida Death Row Roster

- Florida Execution List, 1976 - present

- Florida Execution List, 1924 - 1964

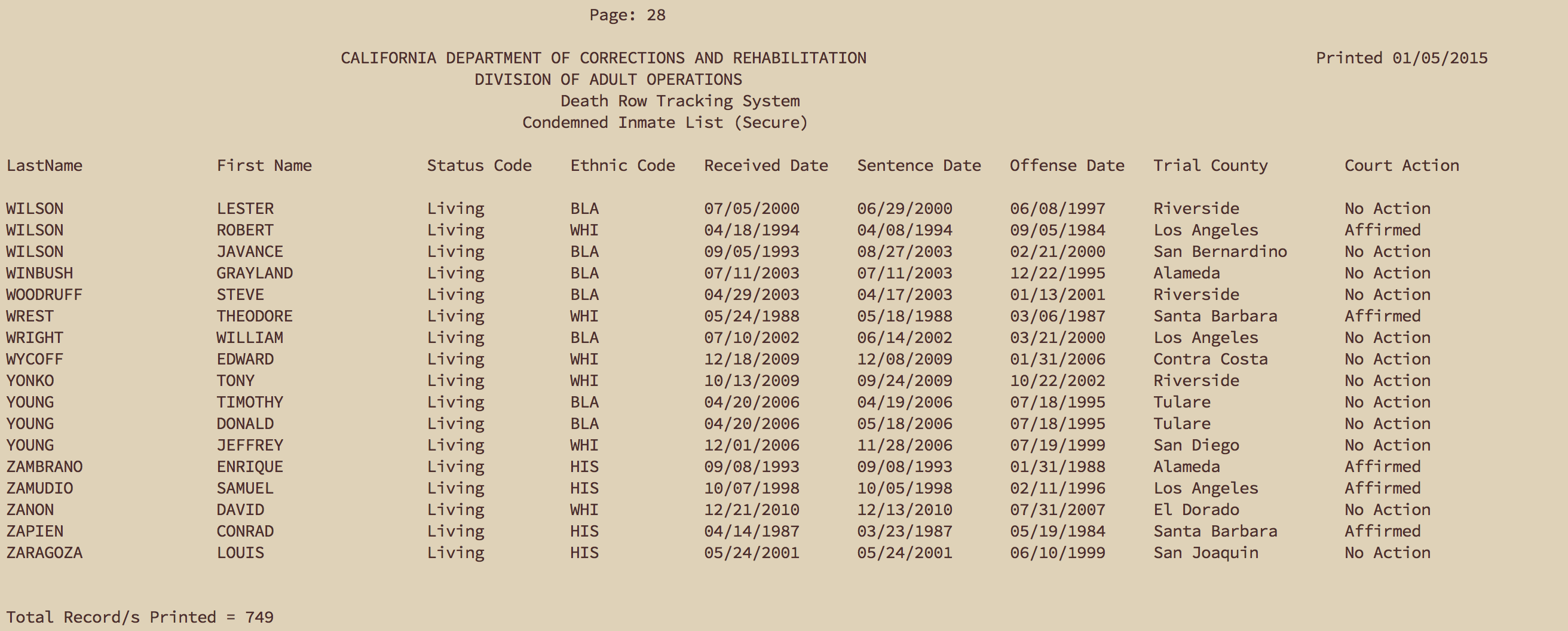

- California Condemned Inmate List

This is an extension of the HTML web scraping exercise here (the first part of it, not the rambling parts after that).

The HTML and text parsing, you already know how to do. PDF parsing, at least in this case, is not much different. And now you can say you’ve had some experience in the fun field of working with and coordinating datasets from different jurisdictions.

This exercise will extract the Race/Ethnicity data from each data source and in the final step, will print to stdout the combined data from each data source. In other words, the total-dr.sh script will run the three other scripts, process and standardize their taxonomy, and output a text stream suitable to pass into another filter (to do a count by state/race, for example):

CA,White

CA,Black

CA,Hispanic

TX,Other

TX,White

FL,Black

FL,White

So if the concepts of separating concerns (between different scripts), stdin/stdout, and shell scripting weren’t clear to you now, they will be after this exercise. Also, you’ll get to examine the data produced by parts of our criminal justice system. This exercise involves extracting the race/ethnic column because it’s the least difficult to parse across all the different data sources, even though the taxonomy is different.

Deliverables

A project folder named "death-row-parsing"

In your compciv repo, create a folder named:

homework/death-row-parsing

This is where your code will exist.

The script, "helper.sh"

The helper.sh script will download the following files to the data-hold subdirectory for your other scripts to use:

The script, "tx-dr.sh"

The tx-dr.sh script should parse the two Texas death row pages and when run, should output the Race column, as collected from both pages as a text stream, e.g.

White

White

White

Black

Hispanic

Black

Other

The exact code to do it for the current death row listing can be found in the Scraping the Texas death row list tutorial. The code to extract the data from the 1923-1973 page should be pretty similar.

The script, "fl-dr.sh"

The fl-dr.sh script should parse the three Florida death penalty pages and output the “Race/Gender column”, as collected from the three pages as a text stream, e.g.

BM

BM

WM

OM

The script, 'ca-dr.sh'

The ca-dr.sh script should parse the California condemned inmate list and output the “Ethnic Code” column as a text stream, e.g.

WHI

BLA

HIS

OTH

The script, `total-dr.sh`

The total-dr.sh script should run the other three scripts and produce a text stream:

CA,White

CA,Black

CA,Hispanic

TX,Other

TX,White

FL,Black

FL,White

The order of the data doesn’t matter, but what does matter is that the terms used for race are standardized in the output. That is, even though each state uses different terms, the total-dr.sh should produced a standardized output using these terms:

- Black

- Hispanic

- Other

- White

Hints

The parsing here is no more difficult than what you've seen in past exercises, including the initial pup tutorial and the White House briefings homework. However, there's enough distinct, moving parts that you should probably not wait until the last day to start this.

The most unfamiliar part of this assignment will be figuring out what total-dr.sh is supposed to do. Before you worry about it, ask yourself this: Have I finished the three other state scrapers so that they output exactly what was asked for? If not, then review this clip from The Wire (below), read the Wikipedia article on Separation of concerns, and finish those three scrapers before worrying about total-dr.sh:

Remember stdout

Again, if you don't understand the concept of stdout, of having programs print to standard output so that other programs (in this case, total-dr.sh) can use their output, then re-read this brief primer on the concept.

Each of the three state-focused scripts focus on extracting and outputting the raw data from their respective sources. They don't have to worry about anything than doing their data-extraction job and printing/echoing to stdout. They let total-dr.sh take care of making the terms uniform (i.e. converting WM to White), and so forth.

This means that total-dr.sh will itself be calling each of the three scripts, reading their output, and translating/converting as necessary to produce the desired stream:

CA,White

CA,Black

CA,Hispanic

TX,Other

TX,White

FL,Black

FL,White

If you run the output of total-dr.sh through the filters we've used before, you should get answers similar to this:

# Showing the broken down totals for each state

user@host:~$ bash total-dr.sh

272 CA,Black

178 CA,Hispanic

40 CA,Other

256 CA,White

312 FL,Black

16 FL,Hispanic

3 FL,Other

348 FL,White

399 TX,Black

122 TX,Hispanic

5 TX,Other

253 TX,White

# Showing the totals across three states

user@host:~$ bash total-dr.sh | cut -d ',' -f 2 | sort | uniq -c

983 Black

316 Hispanic

48 Other

857 White

The damn

In some of your scrapes, you may notice a character in the raw HTML that is denoted as …which is invisible when viewing it via browser or in the command-line Terminal. This is a non-breaking space…there's not much reason to go into it except it can often be a severe pain in the ass. My advice…if it keeps coming up, that you filter for text values that contain at least one alphabetical character, i.e.:

cat whatever.html | pup '.whatever' | grep '[[:alpha:]]'

However, you can also address the character with this literal string, and filter it out using the -v inverse filter (-P is a flavor of regex that is necessary here):

cat whatever.html | pup '.whatever' | cat grep -vP '\xc2\xa0'

About tx-dr.sh

You should probably finish this first, as the parsing code will be very similar to the one found in this tutorial I've written. You'll just be running it twice (one for each of the Texas pages). The Texas website also has the racial statistics posted, so you can use them to double-check your work

About fl-dr.sh

There are three pages here, but the concept is the same as it is for tx-dr.sh, with the data being inside a HTML table with a "Race/Gender" column. You may be wondering what to do with the gender information after you've extracted it: again, that's for total-dr.sh to worry about.

About ca-dr.sh

This is kind of a trick question: this list isn't HTML, it's a PDF. You may be wondering, "well, how do I turn a PDF into text?", but I'll leave that to you and Google.

Suffice to say, the recommended tool is already installed on corn.stanford.edu, and if you're using the one that I think is the most obvious to use, look at its help page, and one of its options will allow you to convert the PDF into text in a format that looks like this:

And at that point, it's just using good ol' grep and a regular expression to extract the terms relating to race.

If you find the pattern difficult to isolate in a single grep, I would highly suggest using two grep calls, based on the observation that, according to the data in that PDF, there is one common physical aspect about every inmate whose race is listed.

About total-dr.sh

If you've made it this far, congratulations. This means that the other three scripts, when run, are outputting their extracted race data to standard output.

This means that total-dr.sh will run each script, and then perform the necessary translation needed to, for example, in the case of California, convert OTH to Other, and so forth.

The four racial categories that are common to all three states are:

- Black

- Hispanic

- Other

- White

The total-dr.sh will produce a stream consisting of those terms and the state from which each data row was produced, e.g:

CA,Black

CA,Hispanic

CA,Other

CA,White

...

TX,Other

TX,White

Since total-dr.sh is running each of the scripts, and controlling which each script outputs, it also can output the state identifier (and the comma separator) with each line.

Using sed

We haven't formerly covered sed, but I think it will be by far the easiest way to convert the output. sed is a big program, but you should only need to use its immensely useful substitution command.

Think of sed as a much more flexible and powerful version of the tr tool, as tr can only convert one kind of character at a time.

Here's sample usage:

user@host:~$ echo 'WM' | sed 's/W/White/'

WhiteM

Don't be afraid to use as many sed filters in succession as you need for filtering out a stream:

user@host:~$ echo 'WM' | sed 's/M//' | sed 's/W/White/'

White

You can also take advantage of regular expressions with sed, though I think the text you need to translate is simple enough that you can stick with just translating literal characters.

Find more examples of sed and substitution here.

Again, it can't be emphasized enough that you'll want to do things step-by-step_, and to understand that what makes the logistics here possible (or at least, relatively simple) is the idea of text as a universal interface.

Solution

You can see a copy of the solution on Github

The helper.sh file is in charge of preparing all of the data, including downloading it, such that none of the other scripts should involve a curl or anything other than text/html-processing tools:

mkdir -p data-hold

# Download Texas

curl -s http://www.tdcj.state.tx.us/death_row/dr_offenders_on_dr.html > data-hold/tx_dr.html

curl -s http://www.tdcj.state.tx.us/death_row/dr_list_all_dr_1923-1973.html > data-hold/tx_dr_past.html

# Download Florida

curl -s http://www.dc.state.fl.us/activeinmates/deathrowroster.asp > data-hold/fl_dr_roster.html

curl -s http://www.dc.state.fl.us/oth/deathrow/execlist2.html > data-hold/fl_dr_list2.html

curl -s http://www.dc.state.fl.us/oth/deathrow/execlist.html > data-hold/fl_dr_list.html

# Download California data

curl -s http://www.cdcr.ca.gov/capital_punishment/docs/condemnedinmatelistsecure.pdf > data-hold/ca_dr.pdf

# Convert the California data into a PDF

pdftotext -layout data-hold/ca_dr.pdf data-hold/ca_dr.txt

The tx-dr.sh script is pretty straightforward and follows the same strategy outlined in the HTML-parsing tutorial here. The two different pages simply list race in a different column:

cat data-hold/tx_dr.html | pup 'td:nth-of-type(7) text{}'

cat data-hold/tx_dr_past.html | pup 'td:nth-of-type(4) text{}'

The fl-dr.sh script involves the same kind of pup call across all three pages (thus, I'm able to cat them all together), though because there are extra rows (corresponding to multiple-crimes per inmates), we have to use a grep to remove columns that don't have upper-case characters in them. The following is actually just a one-line command involving cat, pup, and grep, though I've split it up via backslash:

cat data-hold/fl_dr_list2.html \

data-hold/fl_dr_list.html \

data-hold/fl_dr_roster.html | \

# grab the text of the third column

pup 'td:nth-of-type(3) text{}' | \

# match only the lines that have uppercase ltters in them

# as some columns contain , i.e. the blank non-breaking spaces

grep -Eo '\b[[:upper:]]{2}\b'

The ca-dr.sh script does not use pup, because after the pdftotext conversion, the data is just plain text, not HTML.

You could extract the text successfully by just using grep a couple of times, as well as remembering the purpose of regular expressions: to describe patterns in text. Please continue to remember and review them, as they will make your life much, much easier in any kind of text task you will ever encounter in the real world (which will be many occasions):

cat data-hold/ca_dr.txt | \

# Cut off everything before "Living"

grep -oE 'Living.+' | \

# Grab only boundedthree-capital letters

grep -oE '\b[A-Z]{3}\b'

As you should've noticed by now, the other three scripts just care about printing text to standard output. This leaves the total-dr.sh script to call each of them and modify the text as needed:

# Printing Texas

# There is one substitution necessary, as there was one inmate

# classified as "Native American"

bash tx-dr.sh | sed -E 's/Native +American/Other/' | \

# the carat (not inside of brackets) denotes the beginning of the line

# so I'm effectively saying, substitute "TX," at the beginning of each line

sed s/^/TX,/

# Printing Florida

# since we're discarding gender, we just need to substitute based

# on the first character

bash fl-dr.sh | sed s/B./Black/ | sed s/W./White/ | \

sed s/H./Hispanic/ | sed s/O./Other/ | \

sed s/^/FL,/

# Printing California

bash ca-dr.sh | sed s/WHI/White/ | sed s/BLA/Black/ | \

sed s/HIS/Hispanic/ | sed s/OTH/Other/ | \

sed s/^/CA,/

Common mistakes

Not everything is HTML

Remember that each parsing tool we've learned, including jq and pup, are meant for a specific text format, such as JSON or HTML. When you are dealing with text that does not follow the format, then you do not use those tools. Those are not tools for text in the way that grep is; they are tools for parsing.

Not separating concerns

The purpose of the helper.sh is to download things once, so that the other scripts can happily focus on the parsing/printing parts of their jobs. So if your helper.sh has curled the appropriate files, then the individual parsing scripts should not repeat the process. Similarly, total-dr.sh should not have any calls to pup – total-dr.sh expects only to deal with plaintext by the time it goes into action.

Repeating yourself

Inside total-dr.sh:

bash tx-dr.sh | sed s/Black/TX,Black/ | \

sed s/White/TX,White/ | \

sed s/Hispanic/TX,Hispanic/ | \

sed s/Other/TX,Other/

Remember that being able to express yourself in patterns is one of the superpowers of programming. You just scraped two pages containing 750+ data points with just two lines of code because you were able to exploit a pattern…you should be indignant at the thought of having to copy-paste that sed command four times just to insert the damn TX, in front of each line. This assignment was only a toy example; imagine if the assignment was to get inmate listings for all 50 states (or at least the ones that still have capital punishment) - the probability that you'd be able to type out all those sed commands without making a mistake is very slim.

Learn to express your actions in the most minimal way possible. It's not that your goal is to replace White with TX,White, it's that you need to insert TX, at the beginning of each line. And there's a regular expression pattern for that:

bash tx-dr.sh | sed s/^/TX,/